Avoiding Unnecessary Work

… continued from the previous post.

Cancelation

Assume we have only a limited amount of time and want to use whatever data we have computed up to that point. We could build our own solution, but Go has provided context.WithTimeout in the standard library since version 1.7.

Let us modify our Fibonacci function to use a context:

| |

We see some of the elements we were missing from the list of concurrency building blocks: cancelation and error handling. Also note that checking for cancelation this often has a noticeable performance impact. We accept that for the sake of clarity and demonstration.

Now we must adapt main, too:

| |

Running this gives:

> go run fillmore-labs.com/blog/goroutines/cmd/try6

*** Finished 45 runs (4 failed) in 107ms - avg 11.1ms, stddev 3.55ms

Although we take a performance hit from checking for cancelation too often, and the timer is not overly precise, the result is quite satisfactory.

> go test -trace trace6.out fillmore-labs.com/blog/goroutines/cmd/try6

ok fillmore-labs.com/blog/goroutines/cmd/try6 1.403s

> go tool trace trace6.out

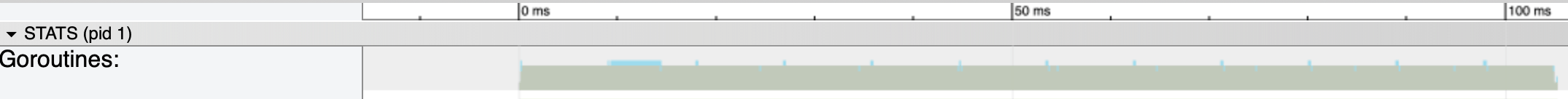

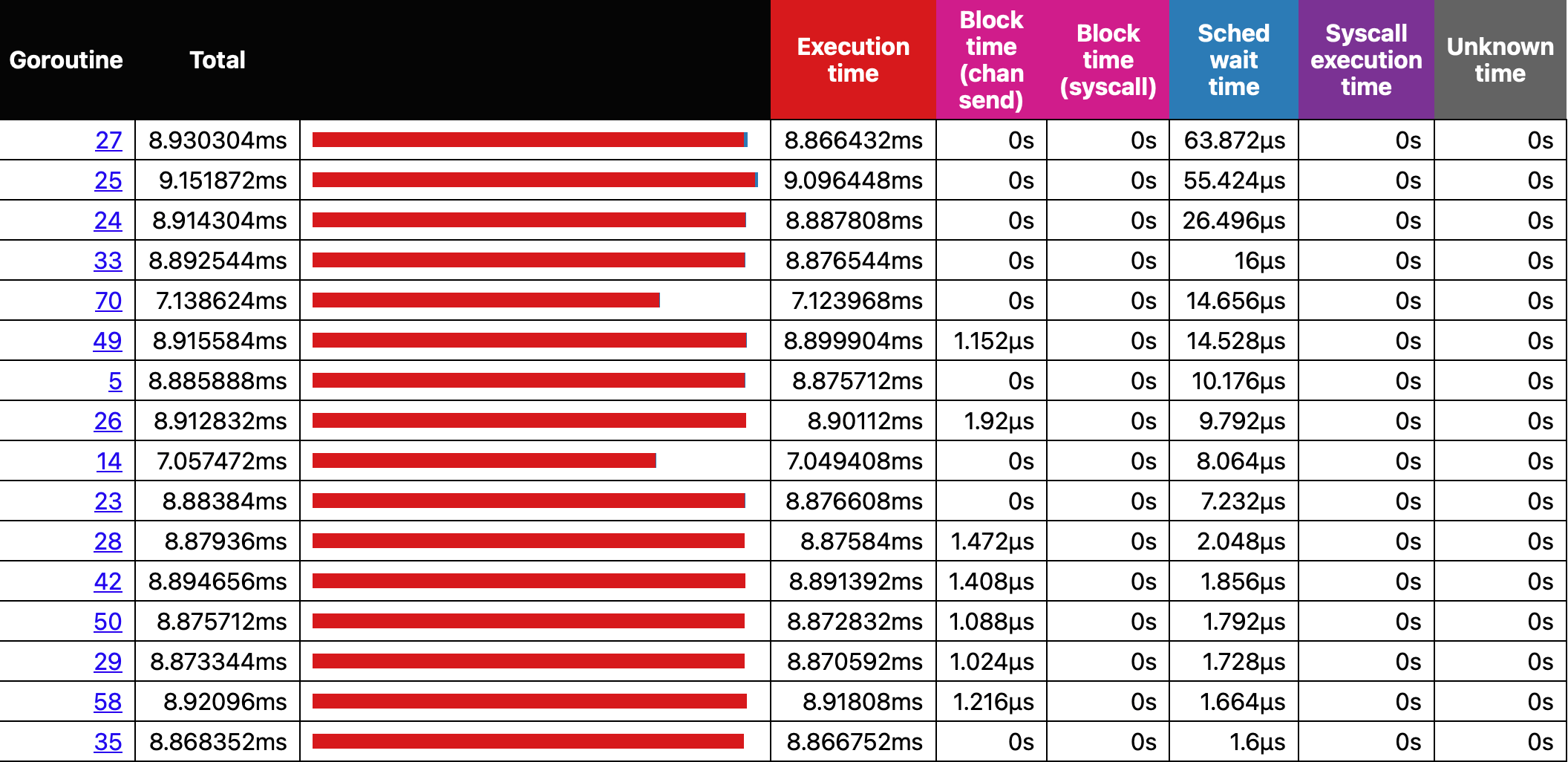

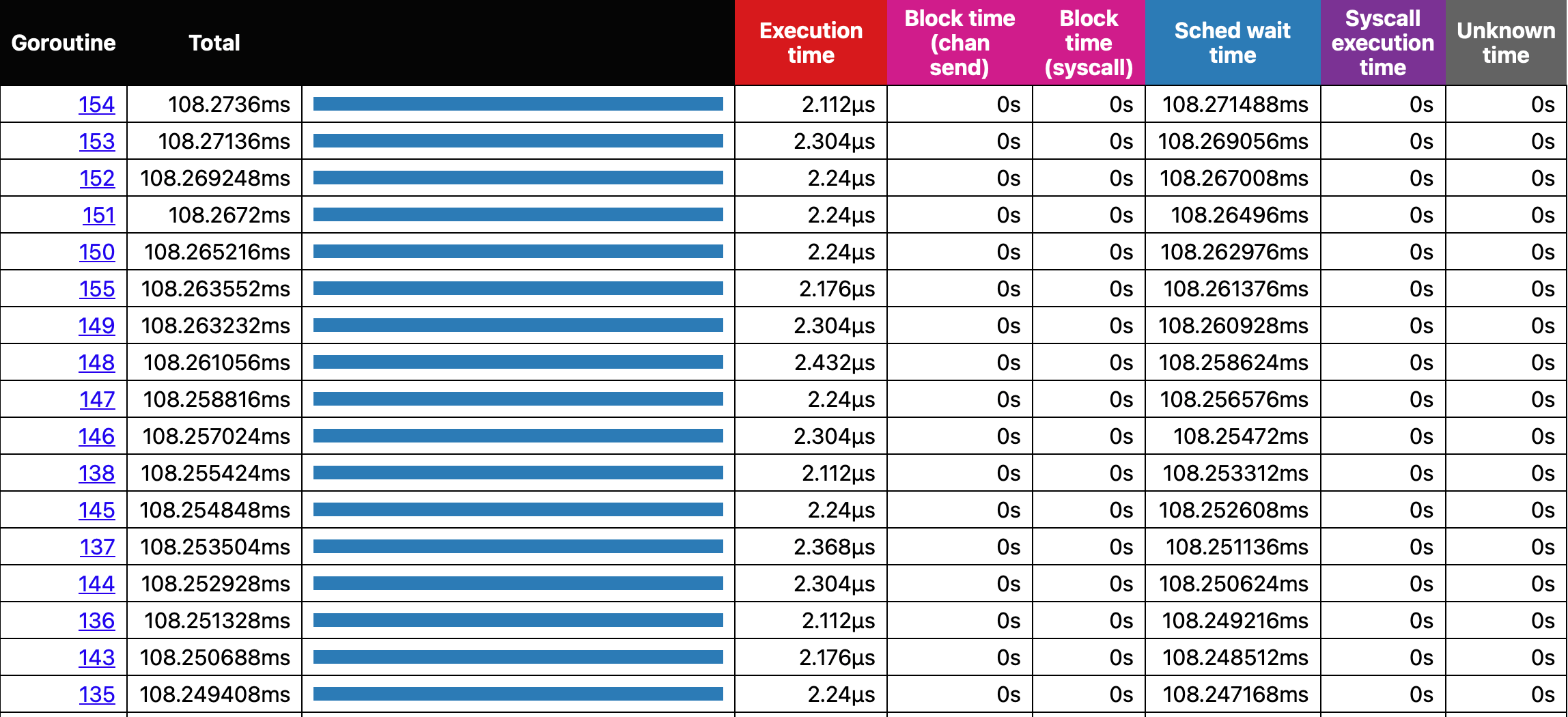

Also, most goroutines are busy processing:

and we are not blocked long waiting for other parts of the program (our runtime measurement):

If we set the semaphore pool size to 1,000 instead of runtime.NumCPU(), we get results like:

*** Finished 48 runs (952 failed) in 108ms - avg 57.8ms, stddev 30.6ms

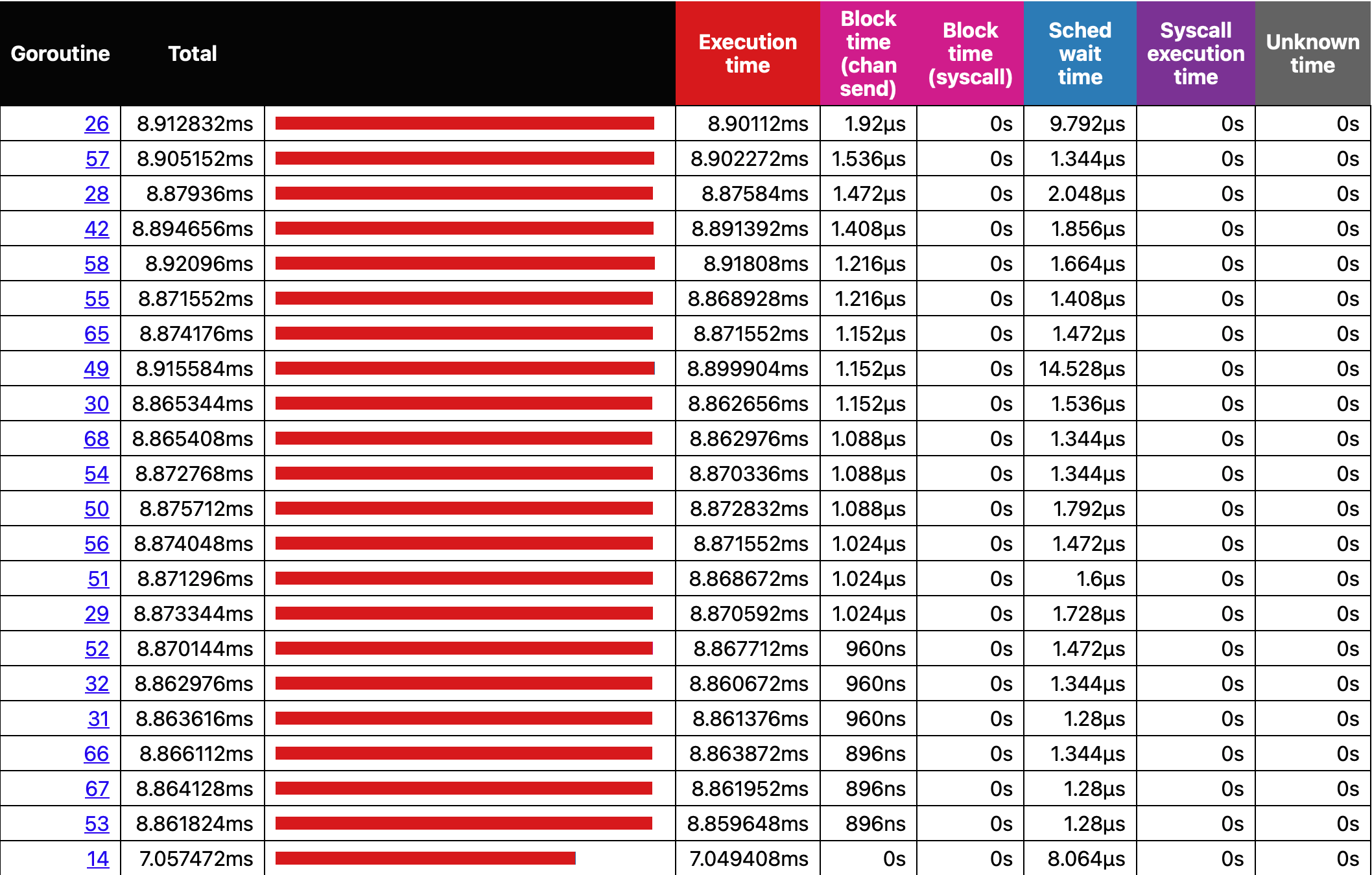

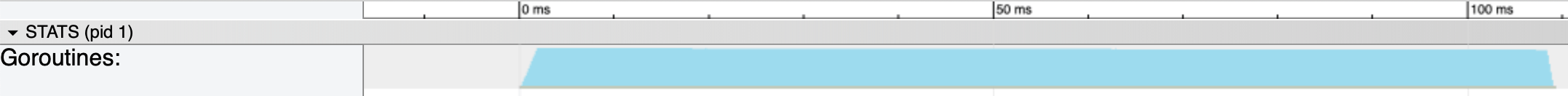

We build up lots of unnecessary goroutines in the beginning which just hang around until canceled:

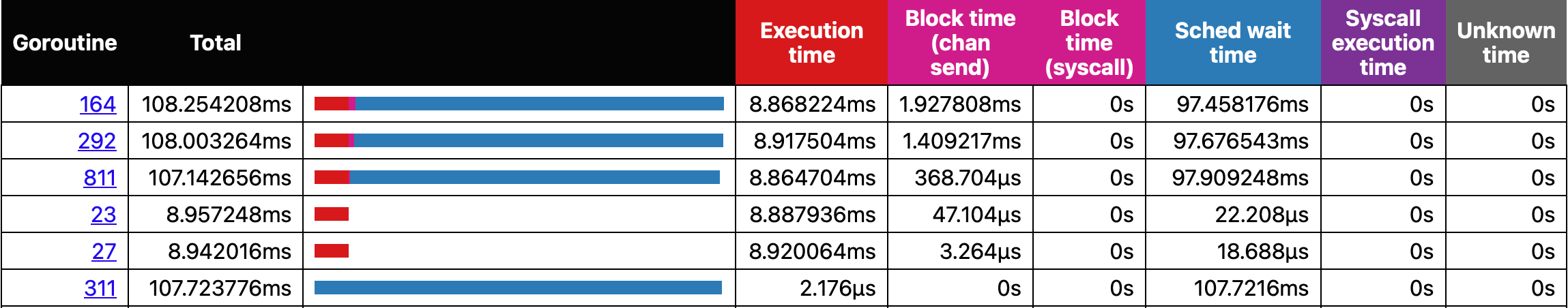

This is also visible in the goroutine analysis:

And some blocking for the few routines that manage to finish:

Summary

We introduced cancelation and error handling so that we can limit the amount of work done once we are no longer interested in the result, for example because another part of the task failed or a deadline expired.

… continued in the next post.