Using Goroutines Will Not Grant You Another CPU Core

… continued from the previous post.

Back to the Drawing Board

Let us keep the original Slow implementation and move parallelization to

main:

| |

Now check if things improved:

> go run fillmore-labs.com/blog/goroutines/cmd/try4

*** Finished 1000 runs in 371ms - avg 185ms, stddev 106ms

This is approximately 3.85 times faster than the single-core solution, which is what we would expect on a four-core machine.

We could stop here, but some numbers immediately jump out. We measure the time between the begin of the request (the start of the goroutine) and when the calculation is done.

While the single-core solution needed 1.47 milliseconds for one calculation, our latest program makes us wait on average 185 milliseconds for the result. Also, the response times vary wildly, with over 100 milliseconds of standard deviation.

Let us diagnose why this is:

> go test -trace trace4.out fillmore-labs.com/blog/goroutines/cmd/try4

ok fillmore-labs.com/blog/goroutines/cmd/try4 0.374s

> go tool trace trace4.out

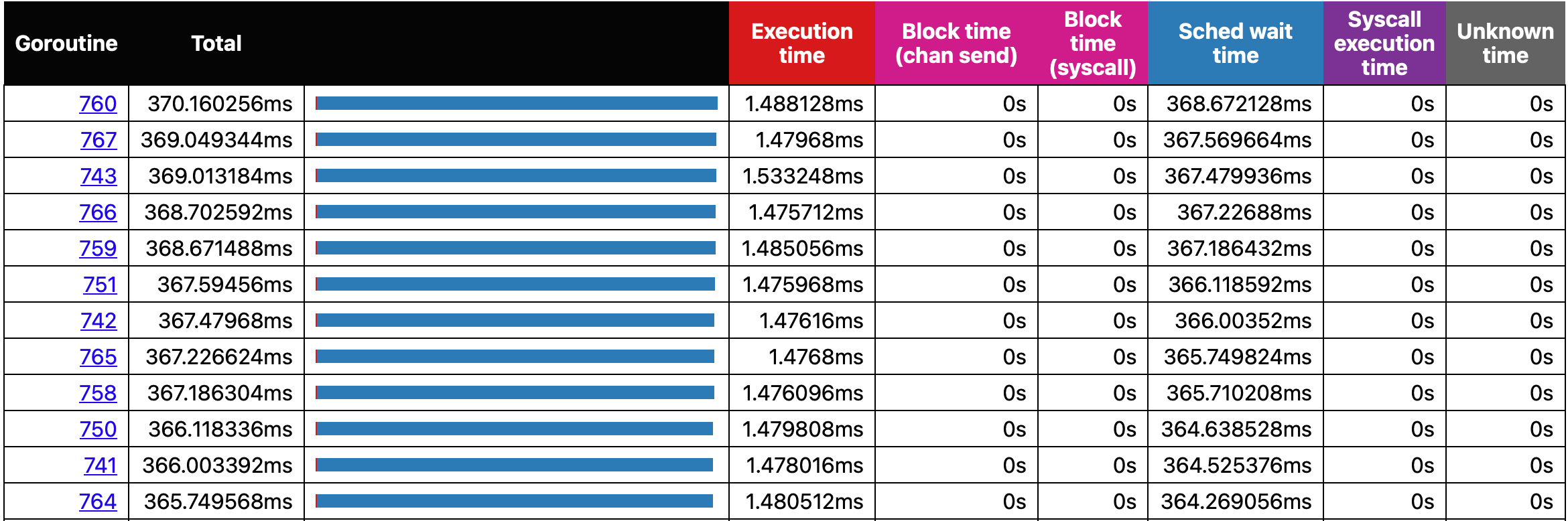

Examining the goroutine analysis of fillmore-labs.com/blog/goroutines/cmd/try4.Run4.func1:

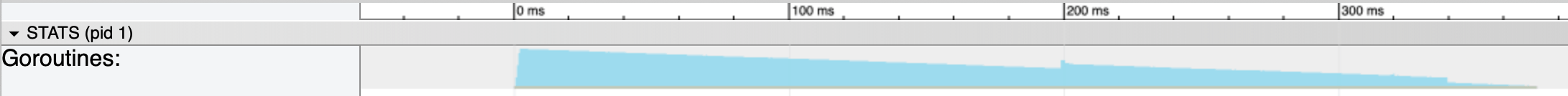

We can seed that we spawn a lot of goroutines that are are mostly waiting to be scheduled and it takes the scheduler a while to finish all of them:

The Go scheduler is good and has implemented some interesting prioritization tricks, so there is little penalty for this build-up, but we make scheduling bad for us and any other part of our application.

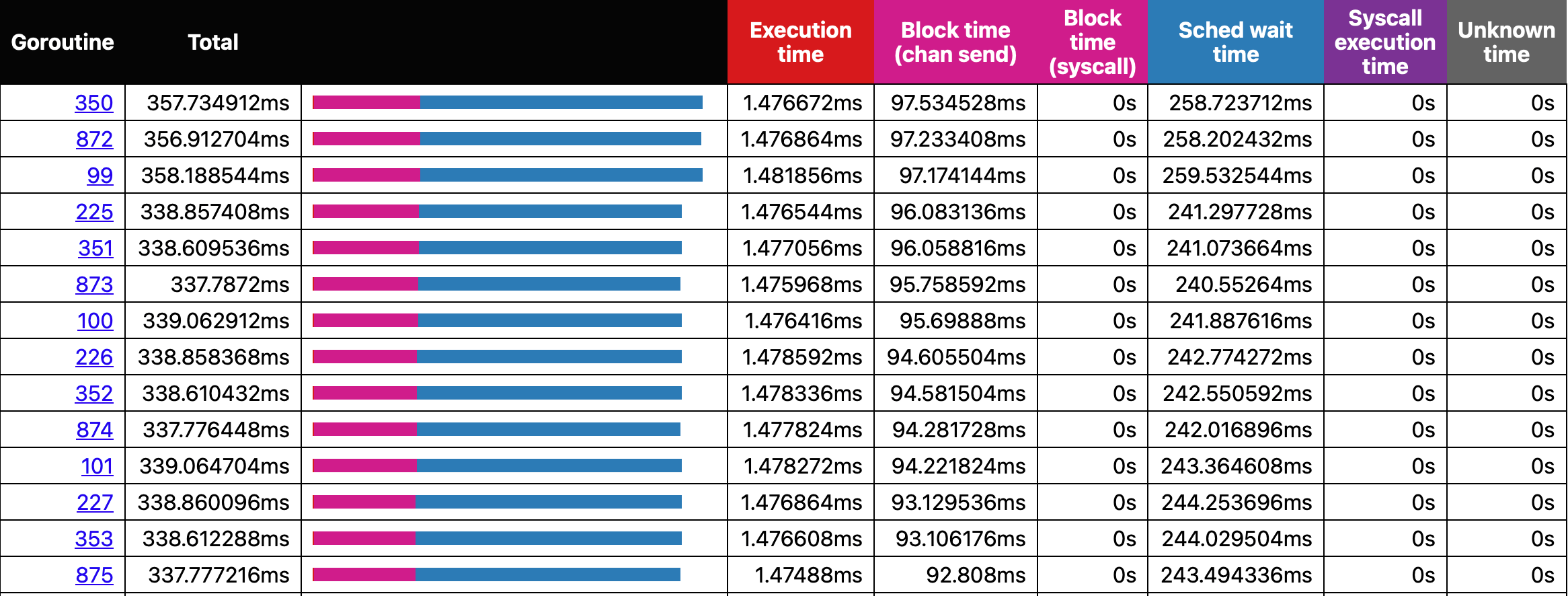

If we look at the block times:

We see the runtime calculations blocking, since we use a channel to send the duration to another goroutine, so placing unnecessary load on the scheduler affects the whole program. Also, we are just wasting RAM by creating workloads that can not be executed.

Do Not Commission More Work Than Can Be Done

Let us modify the way we schedule our calculations. Instead of submitting them all at once, we use a semaphore to only submit as many goroutines as can be executed:

| |

Code-wise it looks remarkably like the solution using wait groups. Now try this out:

> go run fillmore-labs.com/blog/goroutines/cmd/try5

*** Finished 1000 runs in 372ms - avg 1.85ms, stddev 445µs

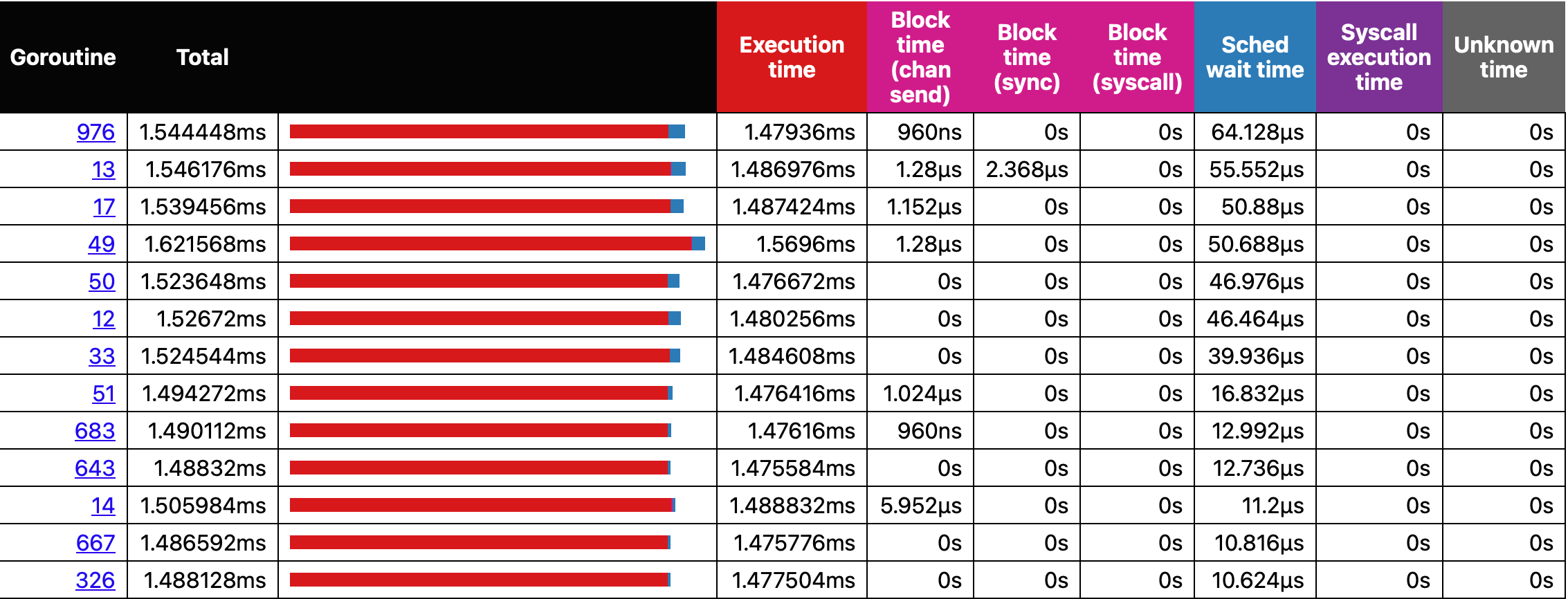

It has nearly the same total runtime as our last attempt, but the requests are much more responsive (a query is calculated after 1.85 Milliseconds, which is close to our single-core result of 1.45 Milliseconds) and much less variance than before. Let us look at the trace of that:

> go test -trace trace5.out fillmore-labs.com/blog/goroutines/cmd/try5

ok fillmore-labs.com/blog/goroutines/cmd/try5 0.374s

> go tool trace trace5.out

We see that the goroutines spend time executing code, instead of just waiting to be scheduled. Also, we have only four goroutines running at a time:

So, this surely is an improvement.

Summary

We studied some ways to parallelize a CPU bound algorithm so that it efficiently uses all CPU cores without swamping the Go scheduler. We also saw that randomly using goroutines has a good chance to make a program run slower than before.

Interestingly enough the synchronous calculation of a single task is faster that the parallel one, and moving concurrency out of the calculation sped things up tremendously.

… continued in the next post.